
Delta Lake to the rescue

An explanation of what Delta Lake is and how it tackles the common challenges of traditional data lakes.

With the exponential growth of data in the current era, more and more organizations are moving towards data-driven decision making. They collect data regardless of its format: structured, semi-structured, or unstructured.

Traditional data warehouses handle structured data well but cannot handle this growing volume of unstructured data. So in 2010 the data lake was introduced, a storage solution that can hold all of these formats.

As data lakes gained popularity, many organizations started facing some common challenges, such as:

Data Integrity - Data lakes lacked ACID guarantees, so a failed or concurrent write could leave the data in a partial or inconsistent state. This made data integrity very difficult to ensure.

Batch and stream processing - To handle both batch and stream processing, engineers often had to build a lambda architecture, which is complex and difficult to maintain. A lambda architecture consists of separate pipelines for batch and stream processing, built with multiple tools. Each system is queried separately, and the results are combined into a final report by yet another system.

Versioning - When multiple people work on the same data, human mistakes are bound to happen, and these can lead to bad writes on disk. Without any way to roll back, it was difficult to ensure the reliability of the data.

To tackle these challenges, Delta Lake was developed.

What is Delta Lake?

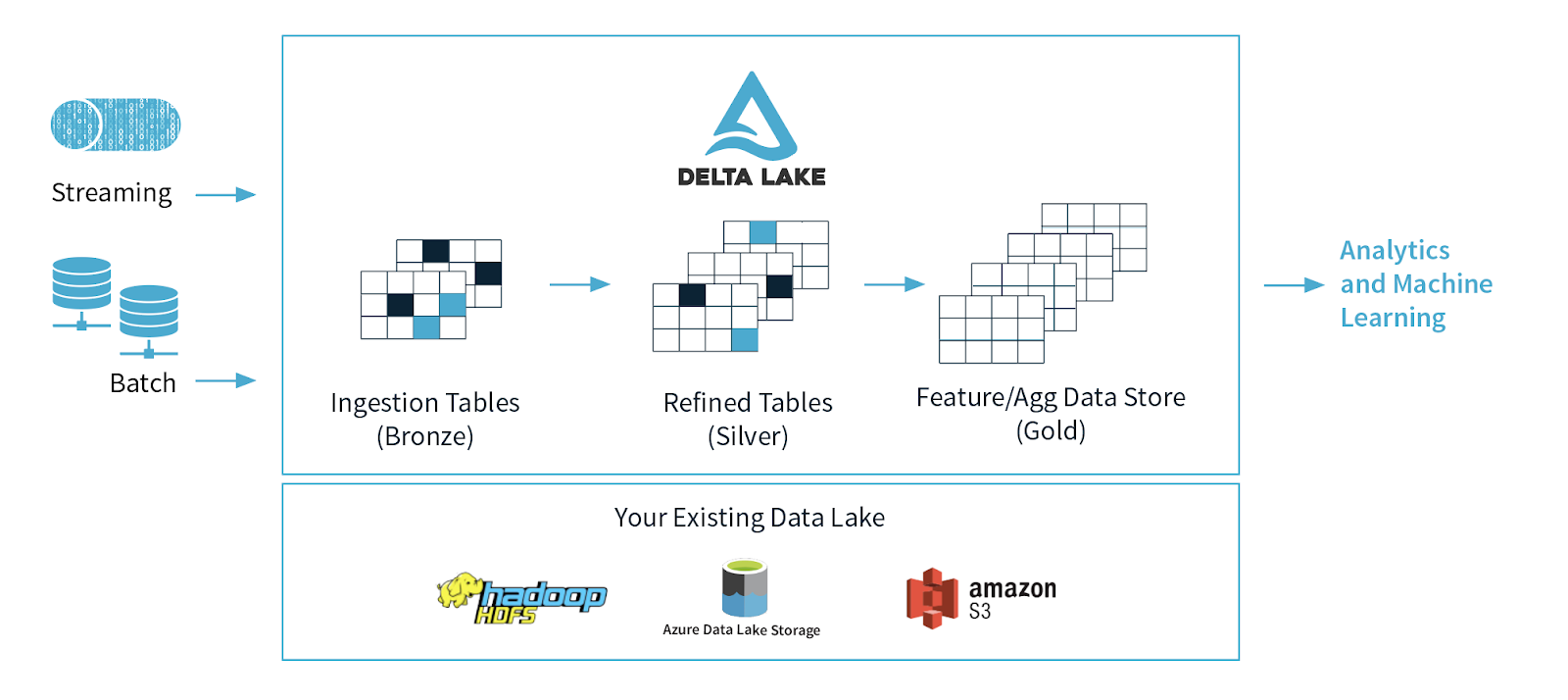

Delta Lake is an open-source storage layer that brings reliability to data lakes. It provides ACID transactions and scalable metadata handling, and it unifies streaming and batch data processing. Delta Lake runs on top of your existing data lake and is fully compatible with Apache Spark APIs.

How does Delta Lake solve the challenges faced by data lakes?

Data Integrity - The Delta Lake format maintains a transaction log of every action performed on the data. Using this log, Delta Lake provides ACID guarantees, which in turn ensure data integrity.

Batch and stream processing - A table in Delta Lake is a batch table as well as a streaming source and sink. You can load historical batch data into the table and also write real-time streaming data to it, then query both from the same table.

Versioning - Every time a Delta table is created or updated, a new version is created and the old version is retained. This feature is also called time travel, because it lets you query or roll back to the data as of an earlier version or point in time.
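To make the transaction log and time travel ideas concrete, here is a minimal sketch in plain Python. It is a toy in-memory model, not how Delta Lake is implemented: the class `ToyVersionedTable` and its methods `commit`, `read`, and `restore` are hypothetical names made up for this illustration. Every write is appended to a log, each log entry corresponds to one version, and reading replays the log up to the requested version. Batch loads and streaming micro-batches both land in the same log, so they can be read back from the same table.

```python
from dataclasses import dataclass, field


@dataclass
class ToyVersionedTable:
    """Toy in-memory model of a versioned table; NOT Delta Lake's implementation."""

    # Append-only log: entry i is the commit that produced version i.
    log: list = field(default_factory=list)

    def commit(self, operation, rows):
        """Append one commit to the log and return the new version number."""
        if operation not in ("append", "overwrite"):
            raise ValueError(f"unsupported operation: {operation}")
        # Copy the rows so later changes by the caller cannot rewrite history.
        self.log.append({"operation": operation, "rows": [dict(r) for r in rows]})
        return len(self.log) - 1

    def read(self, version=None):
        """Rebuild the rows by replaying the log up to `version` (default: latest)."""
        if version is None:
            version = len(self.log) - 1  # -1 for an empty table
        elif not 0 <= version < len(self.log):
            raise ValueError(f"unknown version: {version}")
        rows = []
        for entry in self.log[: version + 1]:
            if entry["operation"] == "overwrite":
                rows = []  # an overwrite discards everything written before it
            rows.extend(dict(r) for r in entry["rows"])
        return rows

    def restore(self, version):
        """Roll back by committing an old snapshot as a new version."""
        return self.commit("overwrite", self.read(version))


table = ToyVersionedTable()
v0 = table.commit("append", [{"id": 1, "amount": 10}])      # historical batch load
v1 = table.commit("append", [{"id": 2, "amount": 20}])      # streaming micro-batch
v2 = table.commit("overwrite", [{"id": 99, "amount": -1}])  # a bad write
v3 = table.restore(v1)                                      # roll back to version 1

print(table.read(v2))  # the bad version is still readable
print(table.read())    # the latest version matches version 1 again
```

Note that the rollback does not erase the bad write. It adds a new version on top, so the full history stays available. In Delta Lake itself, the equivalent read from PySpark goes through the `versionAsOf` or `timestampAsOf` read options rather than a class like the one above.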

Apart from the features listed above, Delta Lake also provides:

Schema Enforcement - Validates incoming data against the table's schema and rejects writes that do not match it, which prevents bad records from being inserted during ingestion.

Upserts and deletes - Supports merge, update, and delete operations to enable complex use cases like change-data-capture, streaming upserts, and so on.

Scalable metadata handling - Because Delta Lake runs on top of Apache Spark, it uses Spark's distributed processing to handle the metadata of petabyte-scale tables.
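Schema enforcement and upserts are easier to picture with a small example. The sketch below uses plain Python lists of dictionaries as a stand-in for a table. The helpers `enforce_schema`, `upsert`, and `delete`, as well as the `SCHEMA` mapping, are hypothetical names for this illustration and are not part of any Delta Lake API. It shows the behavior only: a write with the wrong columns or types is rejected as a whole, and a merge updates rows whose key already exists and inserts the rest.

```python
# Assumed example schema: column name -> expected Python type.
SCHEMA = {"id": int, "name": str}


def enforce_schema(rows, schema):
    """Return `rows` if every row matches `schema`; otherwise raise and write nothing."""
    for row in rows:
        if set(row) != set(schema):
            raise ValueError(f"columns {sorted(row)} do not match {sorted(schema)}")
        for column, expected_type in schema.items():
            if not isinstance(row[column], expected_type):
                raise TypeError(f"column {column!r} expects {expected_type.__name__}")
    return rows


def upsert(target, updates, key):
    """Merge `updates` into `target`: update rows with a matching key, insert the rest."""
    merged = {row[key]: dict(row) for row in target}
    for row in updates:
        merged[row[key]] = dict(row)  # replaces an existing row or adds a new one
    return list(merged.values())


def delete(target, predicate):
    """Return the rows of `target` for which `predicate` is false."""
    return [dict(row) for row in target if not predicate(row)]


customers = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
changes = [{"id": 2, "name": "Bobby"}, {"id": 3, "name": "Cy"}]

# Validate first, then merge: id 2 is updated and id 3 is inserted.
customers = upsert(customers, enforce_schema(changes, SCHEMA), key="id")
customers = delete(customers, lambda row: row["id"] == 1)
print(customers)

try:
    enforce_schema([{"id": "4", "name": "Dee"}], SCHEMA)  # id has the wrong type
except TypeError as error:
    print("rejected:", error)
```

In Delta Lake's Python API the merge step is expressed through `DeltaTable.merge(...)` with clauses such as `whenMatchedUpdate` and `whenNotMatchedInsert`, and the validation happens automatically on write rather than through an explicit function call.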

For more information on how to implement it in your use case, refer to the official Delta Lake documentation.
